Compositionality, Prompts and Causality

Morning, Jun 18th, Sun, 2023 CVPR

Overview

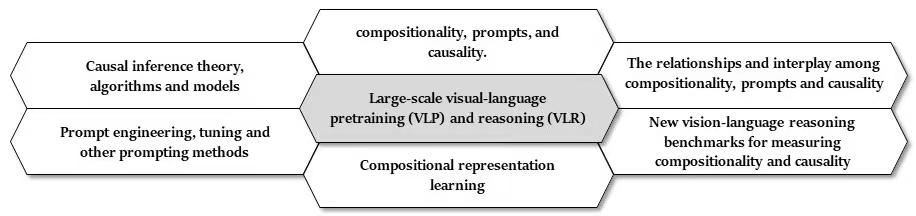

Recent years have seen the stunning powers of Visual Language Pre-training (VLP) models. Although VLPs have revolutionalized some fundamental principles of visual language reasoning (VLR), the other remaining problems prevent them from “thinking” like a human being: how to reason the world from breaking into parts (compositionality), how to achieve the generalization towards novel concepts provided a glimpse of demonstrations in context (prompts), and how to debias visual language reasoning by imagining what would have happened in the counterfactual scenarios (causality).

The workshop provides the opportunity to gather researchers from different fields to review the technology trends of the three lines, to better endow VLPs with these reasoning abilities. Our workshop also consists of two multi-modal reasoning challenges under the backgrounds of cross-modal math-word calculation and proving problems. The challenges are practical and highly involved with our issues, therefore, shedding more insights into the new frontiers of visual language reasoning.

Topics

Location and Time

Sun Jun 18th at the Vancouver Convention Center (Room East-9, from 9:15 am to 5:00 pm)

Program

- 9:15 am-9:30 am Openning

- 9:30 am-10:15 am Keynote speaker (Hanwang Zhang)

- 10:15 am-11:00 am Keynote speaker (Elias Bareinboim, virtual attendance)

- 11:00 am-11:45 am Keynote speaker (Anna Rohrbach)

- 11:45 am-12:30 pm Keynote speaker (Zeynep Akata, virtual attendance)

- 12:30 pm-2:15 pm Lunch break

- 2:15 pm-3:00 pm Keynote speaker (Alan Yuille)

- 3:00 pm-3:45 pm Keynote speaker (Ziwei Liu)

- 3:45 pm-4:30 pm Keynote speaker (Ranjay Krishna)

- 4:30 pm-5:30 pm Closing remarks and poster session

Speakers

Call for Papers

This workshop pays more attention to their limitations of existing notions on Compositionality, Prompts, and Causality. In the sense of visual language reasoning, compositionality demonstrates how human represents and organizes visual events for reasoning better; prompt-based methods build a cross-modal bridge to leverage a large language model for reasoning on new visual concepts in a vocabulary; and causality prevents the chain of reasoning from spurious features and other confounding factors. They are very important whereas have seldom been investigated together and worked out in VLPs, covering a non-exhaustive list of topics such as:

- Large-scale visual-language pretraining (VLP) models, visual-language representation learning and reasoning (VLR) (Especially, how VLP and VLR are connected with compositionality, prompts and causality);

- Causal inference theory, algorithms and models applied for vision, language, and vision-language area;

- Prompt engineering, tuning and other prompting methods for vision, language, and vision-language area;

- Compositional representation learning for vision, language, and vision-language area;

- The relationships and interplay among compositionality, prompts and causality;

- New benchmarks that evaluate vision-language reasoning in terms of compositionality and causality

Our submission includes the regular-paper track and the long-paper track. CVPR officially does not

allow regular workshop papers with more than 4 pages. To this end, our long-paper accepts the

submitted manuscripts with more than 4 pages, and supplementary materials are also allowed in the

long-paper track. Regular papers are submitted via the CMT paper submission website,

Regular-paper track: https://cmt3.research.microsoft.com/NFVLR2023

| Workshop Event | Date |

|---|---|

| Submission Deadline | April 10, 2023 (11:59PM EDT) |

| Notification of Acceptance | April 12, 2023 (11:59PM EDT) |

| Camera-Ready Submission Deadline Due | April 14, 2023 (11:59PM EDT) |

Long-paper track:

https://openreview.net/group?id=thecvf.com/CVPR/2023/Workshop/NFVLR

Long papers are submitted via the openreview system. Note that, only regular papers would be

selected to publish in IEEE Xplore and the CVF open-access archive.

| Workshop Event | Date |

|---|---|

| Submission Deadline | May 24, 2023 (Extended) |

| Notification of Acceptance | June 5, 2023 (Extended) |

| Camera-Ready Submission Deadline Due | June 8, 2023 (Extended) |